ASBIS News

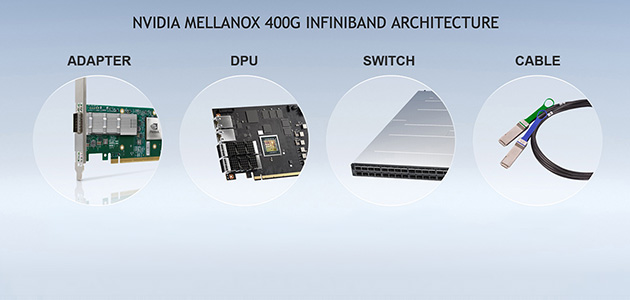

NVIDIA Announces Mellanox InfiniBand for Exascale AI Supercomputing

Global Ecosystem of Server and Storage Partners to Offer Systems with NVIDIA Mellanox 400G, World’s Only Fully In-Network Acceleration Platform

NVIDIA introduced the next generation of NVIDIA® Mellanox® 400G InfiniBand, giving AI developers and scientific researchers the fastest networking performance available to take on the world’s most challenging problems.

As computing requirements continue to grow exponentially in areas such as drug discovery, climate research and genomics, NVIDIA Mellanox 400G InfiniBand is accelerating this work through a dramatic leap in performance offered on the world’s only fully offloadable, in-network computing platform.

The seventh generation of Mellanox InfiniBand provides ultra-low latency and doubles data throughput with NDR 400Gb/s and adds new NVIDIA In-Network Computing engines to provide additional acceleration.

The world’s leading infrastructure manufacturers — including Atos, Dell Technologies, Fujitsu, GIGABYTE, Inspur, Lenovo and Supermicro — plan to integrate NVIDIA Mellanox 400G InfiniBand into their enterprise solutions and HPC offerings. These commitments are complemented by extensive support from leading storage infrastructure partners including DDN and IBM Storage, among others.

“The most important work of our customers is based on AI and increasingly complex applications that demand faster, smarter, more scalable networks,” said Gilad Shainer, senior vice president of networking at NVIDIA. “The NVIDIA Mellanox 400G InfiniBand’s massive throughput and smart acceleration engines let HPC, AI and hyperscale cloud infrastructures achieve unmatched performance with less cost and complexity.”

The announcement builds on Mellanox InfiniBand’s lead as the industry’s most robust solution for AI supercomputing. The NVIDIA Mellanox NDR 400G InfiniBand offers 3x the switch port density and boosts AI acceleration power by 32x. In addition, it raises the aggregated bi-directional throughput of a switch system 5x, to 1.64 petabits per second, enabling users to run larger workloads with fewer constraints.

Product Specifications and Availability

Offloading operations is crucial for AI workloads. The third-generation NVIDIA Mellanox SHARP technology allows deep learning training operations to be offloaded and accelerated by the InfiniBand network, resulting in 32x higher AI acceleration power. When combined with the NVIDIA Magnum IO™ software stack, it provides out-of-the-box accelerated scientific computing.

Edge switches, based on the Mellanox InfiniBand architecture, carry an aggregated bi-directional throughput of 51.2Tb/s, with a landmark capacity of more than 66.5 billion packets per second. The modular switches, based on Mellanox InfiniBand, will carry an aggregated bi-directional throughput of up to 1.64 petabits per second, 5x higher than the last generation.
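As a rough sanity check on these figures, the aggregated bi-directional throughput can be related to the 400Gb/s NDR port rate. The sketch below assumes that every port runs at 400Gb/s in each direction and that the aggregate simply counts both directions of every port; the helper name `implied_port_count` is hypothetical, and the resulting port counts are derived from the quoted numbers, not stated in the announcement.

```python
def implied_port_count(aggregate_bidir_bps: float, port_rate_bps: float) -> float:
    """Ports implied by a bi-directional aggregate throughput.

    Assumption (not from the announcement): each port carries
    port_rate_bps in each direction, so it contributes 2 * port_rate_bps.
    """
    return aggregate_bidir_bps / (2 * port_rate_bps)


NDR_PORT_RATE_BPS = 400e9        # 400Gb/s per port, per direction (from the text)
EDGE_AGGREGATE_BPS = 51.2e12     # 51.2Tb/s edge switch aggregate (from the text)
MODULAR_AGGREGATE_BPS = 1.64e15  # 1.64Pb/s modular switch aggregate (from the text)

edge_ports = implied_port_count(EDGE_AGGREGATE_BPS, NDR_PORT_RATE_BPS)
modular_ports = implied_port_count(MODULAR_AGGREGATE_BPS, NDR_PORT_RATE_BPS)

print(f"Edge switch: about {edge_ports:.0f} ports at 400Gb/s")
print(f"Modular switch: about {modular_ports:.0f} ports at 400Gb/s")
```

Under these assumptions the edge switch figure corresponds to 64 ports, and the modular figure to roughly 2,050 ports. The second number is only approximate because 1.64 petabits per second is itself a rounded value.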

The Mellanox InfiniBand architecture is based on industry standards to ensure backwards and future compatibility and protect data center investments. Solutions based on the architecture are expected to sample in the second quarter of 2021.

Learn more about NVIDIA Mellanox InfiniBand by contacting vad@asbis.com.

Disclaimer: The information contained in each press release posted on this site was factually accurate on the date it was issued. While these press releases and other materials remain on the Company's website, the Company assumes no duty to update the information to reflect subsequent developments. Consequently, readers of the press releases and other materials should not rely upon the information as current or accurate after their issuance dates.